The Future of Computer User Interface is Physics Based

In the early '70s, Xerox's research lab in Palo Alto (PARC) developed the so-called "WIMP" user interface: Windows, Icons, Menus and Pointer. It took about a decade until Apple integrated these ideas into the first Macintosh (1984), and a couple of years later Microsoft's Windows started to use the same metaphors.

Today's operating systems still use essentially the same GUI, based on ideas that were already in place in the '90s. But computing resources keep growing exponentially, so couldn't we use this extra power for a better desktop experience? We could. The following projects are initiatives in that direction, and their eye-candy demo videos are definitely worth watching.

XGL for Linux

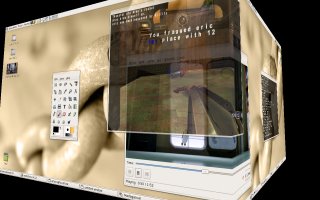

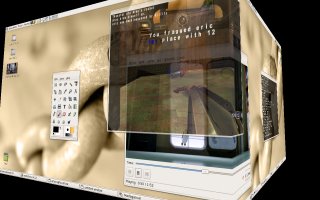

Novell started a project called XGL in late 2004. Its goal is to create an X server architecture for Linux that allows fancier, more intuitive user interaction. XGL puts different desktops on the sides of a cube, and one switches between desktops by rotating the cube. The other features stay in the realm of 2D but include nice effects: windows deform like jelly rectangles when one moves them around, window transparency can be fine-tuned, and so on. Check out this video for a short demo, or burn a Kororaa live CD to try it out yourself. XGL does not bring anything revolutionary, but it makes the current GUI behave in a more physics-based and slightly less abstract way.
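
The jelly effect is easy to approximate with a damped spring. The sketch below is not taken from XGL (we have not looked at its code); it simply assumes that each vertex of a window's mesh is pulled toward the position dictated by the pointer through a spring, so the vertex overshoots slightly and wobbles before settling. The names `SpringPoint`, `step` and `simulate` and the constants `k` and `damping` are illustrative choices, not part of any real API.

```python
from dataclasses import dataclass


@dataclass
class SpringPoint:
    """One vertex of a window mesh, pulled toward a target by a damped spring."""
    x: float
    v: float = 0.0


def step(p: SpringPoint, target: float, k: float = 40.0,
         damping: float = 8.0, dt: float = 1 / 60) -> None:
    """Advance the vertex by one frame (unit mass assumed)."""
    # Hooke's law plus viscous damping: a = -k * (x - target) - damping * v
    a = -k * (p.x - target) - damping * p.v
    # Semi-implicit (symplectic) Euler: update velocity first, then position
    p.v += a * dt
    p.x += p.v * dt


def simulate(frames: int, target: float = 100.0) -> list:
    """Positions of a vertex after each frame when its window is dragged from 0 to target."""
    p = SpringPoint(x=0.0)
    trace = []
    for _ in range(frames):
        step(p, target)
        trace.append(p.x)
    return trace
```

With these constants the spring is underdamped, which is what produces the visible wobble; raising `damping` makes the vertex settle without overshooting.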

Click here to view the XGL demo video

Project Looking Glass

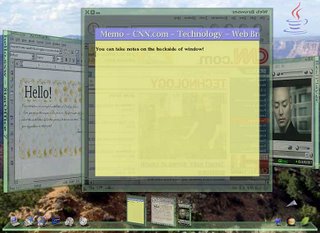

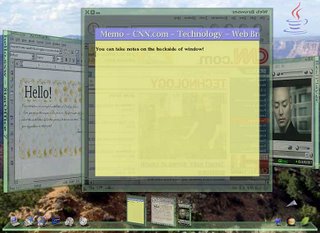

Sun started a similar project as well. Not surprisingly, Project Looking Glass is based on Java technology, and its aim is to enrich the desktop experience with 3D elements. One can, for example, tilt windows and move them off to the side. A neat feature is that one can turn windows around and attach notes to their backs. Other features include a jukebox-like music browser and control over window translucency. You can view the video of Jonathan Schwartz presenting a short demo of Project Looking Glass here. Again, we did not see fundamental innovations in how we interact with the computer, mostly neat enhancements to the user experience.
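
Turning a window around is, at its core, a 3D rotation followed by a perspective projection. The sketch below is a hypothetical illustration, not Looking Glass code: it rotates a window's corners about the vertical axis, projects them with a simple pinhole camera (`focal` and `distance` are made-up parameters), and checks the sign of the rotated normal to decide whether the front of the window or its back, where notes could be attached, faces the viewer.

```python
import math


def rotate_y(point, angle):
    """Rotate a 3D point (x, y, z) about the vertical y axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)


def project(point, focal=800.0, distance=1000.0):
    """Pinhole projection for a camera at z = +distance looking toward -z."""
    x, y, z = point
    scale = focal / (distance - z)  # nearer points (larger z) appear bigger
    return (x * scale, y * scale)


def window_view(width, height, angle):
    """Projected corners of a window turned by `angle`, plus which side faces the viewer."""
    corners = [(-width / 2, -height / 2, 0.0), (width / 2, -height / 2, 0.0),
               (width / 2, height / 2, 0.0), (-width / 2, height / 2, 0.0)]
    projected = [project(rotate_y(p, angle)) for p in corners]
    # The front face's normal starts as (0, 0, 1), pointing at the camera
    normal_z = rotate_y((0.0, 0.0, 1.0), angle)[2]
    side = "front" if normal_z > 0 else "back"
    return projected, side
```

A desktop would redraw the window's content when `side` is `"front"` and the attached notes when it is `"back"`.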

Click here to view the Looking Glass demo video

BumpTop - real physics

Research communities are trying to reinvent the way people interact with computers, too. Anand Agarawala from the University of Toronto's Dynamic Graphics Project had a really cool master's project: BumpTop. He created a 3D computer desktop using a real physics engine, the AGEIA PhysX SDK. Anand thinks the computer desktop should simulate the real desktop as closely as possible, and he goes as far as simulating even the untidiness of a real desk. Of course, BumpTop supports a lot of smart functions: one can drag icons and throw them around, and they collide and behave exactly as one would expect in real life. After organizing icons into piles, you can lay a pile out on a regular grid, browse through it with a fisheye view, or leaf through it exactly like you would through a real book. Dozens of other functions are available, but what matters most is that all of them resemble real-life manipulations. He has a very thorough video presenting the features of BumpTop. Moreover, according to his website, he plans to build a full-blown desktop replacement that can be installed on any machine.
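
Throwing an icon can be approximated with very little physics. BumpTop relies on the AGEIA PhysX SDK; the sketch below does not use PhysX and does not reflect BumpTop's code. It assumes that an icon takes over the pointer's velocity when released, loses speed through a simple friction term, and bounces off the desktop borders with some energy loss. Icon-to-icon collisions are left out, and `Icon`, `update`, `throw`, `friction` and `restitution` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Icon:
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0


def update(icon: Icon, width: float, height: float, dt: float = 1 / 60,
           friction: float = 3.0, restitution: float = 0.5) -> None:
    """Advance a thrown icon by one frame on a width x height desktop seen from above."""
    # Friction modelled as drag proportional to velocity (a simplification;
    # real sliding friction is roughly constant)
    decay = max(0.0, 1.0 - friction * dt)
    icon.vx *= decay
    icon.vy *= decay
    icon.x += icon.vx * dt
    icon.y += icon.vy * dt
    # Border collisions: clamp the position, reverse the velocity and lose some energy
    if not 0.0 <= icon.x <= width:
        icon.x = min(max(icon.x, 0.0), width)
        icon.vx = -icon.vx * restitution
    if not 0.0 <= icon.y <= height:
        icon.y = min(max(icon.y, 0.0), height)
        icon.vy = -icon.vy * restitution


def throw(icon: Icon, vx: float, vy: float, width: float, height: float,
          frames: int = 300) -> Icon:
    """Release the icon with the pointer's velocity (pixels/second) and let it slide."""
    icon.vx, icon.vy = vx, vy
    for _ in range(frames):
        update(icon, width, height)
    return icon
```

For instance, `throw(Icon(100, 100), 2000, 0, 1280, 800)` slides the icon roughly 630 pixels to the right before it comes to rest, while a faster throw hits the right border and bounces back.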

Click here to view the BumpTop demo video

But it does not take teams of researchers and state-of-the-art physics engines to enrich the desktop environment. Kristian Høgsberg implemented a small and simple physics engine, Akamaru, and used it to create a physics-based, Mac OS X-like dock for GNOME. This short video demonstrates what a single programmer can do with a modest investment of programming time.
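
A small engine of this kind can be built from particles and springs. We have not checked how Akamaru is implemented, so the following is only a generic sketch of the technique: particles are advanced with Verlet integration, and springs are enforced by nudging both ends toward their rest length. A dock icon could then be a particle attached to a pinned anchor, so that it springs back after being pulled. `Particle`, `Spring`, `verlet_step` and all constants are hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Particle:
    x: float
    y: float
    pinned: bool = False
    # Previous position; Verlet integration stores velocity implicitly as x - prev_x
    prev_x: float = field(init=False)
    prev_y: float = field(init=False)

    def __post_init__(self) -> None:
        self.prev_x, self.prev_y = self.x, self.y


@dataclass
class Spring:
    a: Particle
    b: Particle
    rest: float
    stiffness: float = 0.5  # fraction of the length error corrected per iteration


def verlet_step(particles, springs, gravity=(0.0, 0.0), dt=1 / 60,
                damping=0.98, iterations=4):
    """Advance all particles one frame, then relax the springs toward their rest lengths."""
    for p in particles:
        if p.pinned:
            continue
        # Damped position Verlet: x_new = x + damping * (x - x_prev) + a * dt^2
        nx = p.x + damping * (p.x - p.prev_x) + gravity[0] * dt * dt
        ny = p.y + damping * (p.y - p.prev_y) + gravity[1] * dt * dt
        p.prev_x, p.prev_y = p.x, p.y
        p.x, p.y = nx, ny
    for _ in range(iterations):
        for s in springs:
            dx, dy = s.b.x - s.a.x, s.b.y - s.a.y
            dist = math.hypot(dx, dy) or 1e-9  # avoid dividing by zero
            wa = 0.0 if s.a.pinned else 1.0
            wb = 0.0 if s.b.pinned else 1.0
            if wa + wb == 0.0:
                continue
            # Split the correction between the free ends of the spring
            corr = (dist - s.rest) / dist * s.stiffness / (wa + wb)
            s.a.x += dx * corr * wa
            s.a.y += dy * corr * wa
            s.b.x -= dx * corr * wb
            s.b.y -= dy * corr * wb


# Usage: a dock icon pulled 50 px below its anchor, released, springing back
anchor = Particle(0.0, 0.0, pinned=True)
icon = Particle(0.0, 50.0)
spring = Spring(anchor, icon, rest=30.0)
for _ in range(600):
    verlet_step([anchor, icon], [spring])
```

Here the icon settles at the 30-pixel rest length; adding gravity or moving the anchor with the mouse would give the bouncy behaviour seen in docks of this kind.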

Conclusions

All the projects presented above have at least one thing in common: physics simulation. They simulate the real world in order to give users something that is less abstract and closer to the world they are used to.

The question is: will users accept it? Innovating in user interfaces is not an easy task. Users don't want to learn a lot of new things in order to use a computer, so there will always be resistance to new GUIs. One way to get around this problem is to avoid drastic changes (as XGL and Project Looking Glass do), which allows an easier transition. Microsoft learned this the hard way with Microsoft Bob. So this time the Redmond-based company is taking smaller steps with Windows Aero in its upcoming Vista operating system. According to this demo video, Aero introduces smooth transitions and improved switching between windows, but nothing too radical.

Even for a bigger leap (like BumpTop), the first and foremost criterion for success is that the new system offers improved usability. Joel Spolsky defined usability in a single sentence: something is usable if it behaves exactly as expected. And what could look more natural than the physics of the world we live in? So, if we accept this definition, these projects are definitely on the right track toward tomorrow's mainstream desktop environment. I think there is still a long way ahead of us, but maybe in a couple of years Microsoft or Apple will take the step, just as Steve Jobs visited Xerox's research center in 1979 and came back with a new user interface.

---

Thanks to Gabor Cselle for reviewing drafts of this.

7 Comments:

I think this physics-centred approach to interaction is misguided. The whole point about reality is that it is messy, it is a hassle; that's why we use computers. Computers are symbolic machines. The power inherent in them is not that they can behave like the real world (otherwise why not have a normal desktop with folders and pencils and such?) but that they can treat information in the abstract, according to rules that need have nothing to do with reality. That's precisely what makes computers a useful tool, not that they can work as typewriters or photocopiers. The higher the abstraction, the less one has to deal with incidentals, and the more one can deal with the essential complexity of the domain. For instance, when searching, one could simulate the way we search in the real world and flip pages one by one. Or we could use regular expressions, or incremental search. Intuitively, flipping pages is a lot easier to comprehend, but regular expressions and incremental search are far more powerful and productive. This whole idea of point-and-click is a retrogression, converting mental workers into physical workers: instead of dealing with symbols, we deal with representations of the symbols. Sure, it may be easier at first, but I don't see the point at all.

I'm not saying that the CLI is always the best interface, just that the greater the abstraction (the more linguistic and symbolic an interface is, as opposed to metaphoric and representational), the more power and productivity it can confer on an experienced user.

I completely agree that one of the greatest advantages of computers is that they are so abstract and symbolic.

But nowadays computers are everywhere, and I do not know how many computer users know regular expressions. The great mass of users mostly just chat, email and write some texts. I expect that they would enjoy something that looks natural (maybe something physics-based).

Of course, one does not have to simulate everything. And it is difficult to get the right mixture (see the Microsoft Bob flop).

Time will tell, but I think changes in GUIs take a lot of time anyway.

Another OS being researched is the work of Jef Raskin and the project he left behind, called Archy. Check it out at http://raskincenter.org/ ... It used to be called The Humane Environment.

As for the use of physics or naturalism in future interfaces, I really don't see this as a departure from what we have today. It is just that as computing power grows, the fidelity of the metaphor increases as well.

I basically don't see this as a leap. There are still objects to grab and then manipulate in all the models presented.

In my mind, the Archy project is concerned less with the metaphoric layer of the OS than with the ways we manipulate those objects, and to me this is REALLY where the rubber meets the road in making systems better.

Dave, thanks for the link! I will check out how Archy works.

You're right, the metaphors do not actually change much. The way I see it, the flat screen combined with the mouse is certainly a "limitation" on what one can do with the user interface.

Ultimately, the examples shown here are impressive at an 'eye candy' level (the importance of which should not be entirely dismissed) but don't hold much hope of improving productivity or usability.

For me, BumpTop is the most impressive, especially as thought has gone into new interactions and idioms for manipulating the objects (e.g. the lasso behaviour).

However, something like BumpTop might be more commercially effective as a 'workspace' of some kind for a specific application rather than as a general-purpose desktop: somewhere to work temporarily when you need to scan and sort lots of files. For example, it might be a good workspace for playing around with a virtual photo album, or perhaps for comparing and contrasting different accounts documents.

I agree that the whole physics thing is a misleading tangent. We should go back to understanding the basic goals that a desktop might fulfil. As the 'main' screen of most common interfaces, it can surely do much more than just be a place to put icons.

One idea would be to have an 'aggregator' style page (perhaps just a simple text page) that gives you important information as soon as your computer finishes loading:

E.g. immediately show your unread e-mails, appointments, files you worked on yesterday, notes you left on finishing work the night before, RSS digests from feeds you subscribe to, etc.

The problem as I see it isn't that we need a cleverer type of window, but rather that computing has gotten itself stuck in an iconic portrayal of hard disk architecture in the form of drive/folder/file.

Consider the recent and ongoing excitement about new and putatively more powerful desktop search utilities. In other words, the Next Big Thing in computing is (at least in part) thought to be a more efficient way of finding stuff after it's been lost rather than keeping it from getting lost in the first place.

Creating and managing information shouldn't be based on disk drive hardware and formatting. Physics-based modeling of hard drive architecture doesn't really fix the underlying problem.

I respect Joel and often agree with him, but I disagree with his definition of usability.

I expect Outlook to take forever to start, to have crappy searching, and to always ask me annoying questions about where on disk I want my messages stored. Is it more usable for living down to my expectations? No.

Going back 15 years, all the Windows users I knew expected to have to reboot 3 times a day. Was Windows more usable for behaving as expected? Hardly.

As a user interface designer, one of the coolest things is sitting down a new user in front of an application, and seeing him observe the program do something *better* than he expected, and say "oh, neat! I didn't expect that! that's really helpful!".

One of the most insightful things I've ever heard was "In order to *improve* the user interface, we have to *change* the user interface". The only way to make something behave *exactly* as expected is to change exactly nothing. I think we can all agree that this would not yield better user interfaces.

Part of UI design is making new features discoverable. The very use of the word "discoverable" flies in the face of Joel's definition: if he didn't know about it, it sure ain't expected!
